Under the Hood of Fit3D 3D Body Scan Processing

3D body scanning is amazingly complicated, and we have spent the past decade making it as simple as possible for our customers and their members. Because we hide much of the heavy technology behind the scenes, we thought we'd publish a blog post highlighting what actually happens when a user takes a Fit3D body scan.

Here goes. 🧐

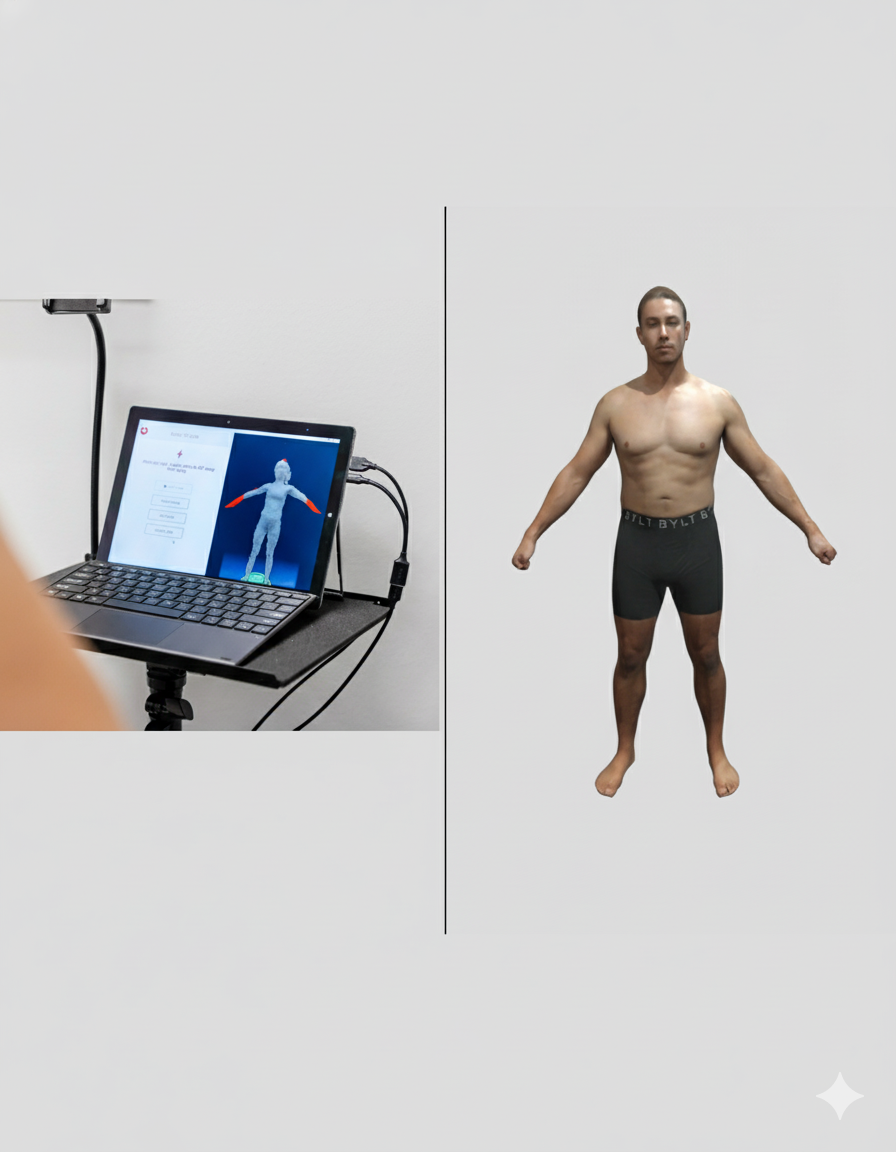

The end-user takes a Fit3D scan

The user authenticates by logging into their Fit3D account on the tablet attached to the scanner. The user then receives scan prompts that clearly explain how to take the scan for the best results.

After the scan instructions, the scanner captures the user's weight and balance.
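This post doesn't say how the scanner measures balance. One common approach on force platforms is to place a load cell under each corner: the sum of the readings is the weight, and the load-weighted average of the cell positions is the center of pressure. The sketch below assumes four load cells at hypothetical corner positions; it illustrates the general technique, not Fit3D's hardware.

```python
from dataclasses import dataclass

# Hypothetical (x, y) positions in meters of four load cells under a square
# platform, measured from the platform center. Not Fit3D's actual layout.
CELL_POSITIONS = [(-0.3, -0.3), (0.3, -0.3), (0.3, 0.3), (-0.3, 0.3)]

@dataclass
class BalanceReading:
    weight_kg: float
    cop_x_m: float  # center of pressure, side-to-side offset from platform center
    cop_y_m: float  # center of pressure, front-to-back offset from platform center

def read_balance(cell_loads_kg: list[float]) -> BalanceReading:
    """Combine per-cell load readings into total weight and center of pressure."""
    if len(cell_loads_kg) != len(CELL_POSITIONS):
        raise ValueError("expected one reading per load cell")
    total = sum(cell_loads_kg)
    if total <= 0:
        raise ValueError("no load on the platform")
    # Center of pressure = load-weighted average of the cell positions
    cop_x = sum(load * x for load, (x, _) in zip(cell_loads_kg, CELL_POSITIONS)) / total
    cop_y = sum(load * y for load, (_, y) in zip(cell_loads_kg, CELL_POSITIONS)) / total
    return BalanceReading(total, cop_x, cop_y)
```

A perfectly centered stance puts the center of pressure at (0, 0); leaning toward one side shifts it toward the cells carrying more load.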

Next, the user lifts the handles and presses the buttons, and the scanner spins the user 400 degrees while capturing depth images from the three cameras on the Fit3D camera tower.

When this process is complete, the user can choose to share her data with a coach at the facility through the Fit3D scanner application. The user then closes the scanning session, and the scanner resets itself and gets ready for the next user.

As the scanner resets, it compresses the more than 1,200 depth images it just captured, encrypts them, and uploads them to the Fit3D Cloud, which we'll cover next.
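A quick back-of-the-envelope reading of these figures, assuming the images are split evenly across the three cameras and captured at a steady rate throughout the 400-degree spin (neither is stated in this post):

$$
\frac{1{,}200\ \text{images}}{3\ \text{cameras}} = 400\ \text{images per camera},
\qquad
\frac{400^\circ}{400\ \text{images}} = 1^\circ\ \text{per image}
$$

In other words, each camera captures roughly one depth image per degree of rotation, and because 400° is 40° more than a full turn, the start of the rotation is seen twice.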

While the Fit3D Cloud is processing the data, the scanner is ready for the next end-user.

The Fit3D Cloud

The Fit3D Cloud processes the raw depth images into scans, measurements, posture, and wellness metrics. The following sections explain how this happens.

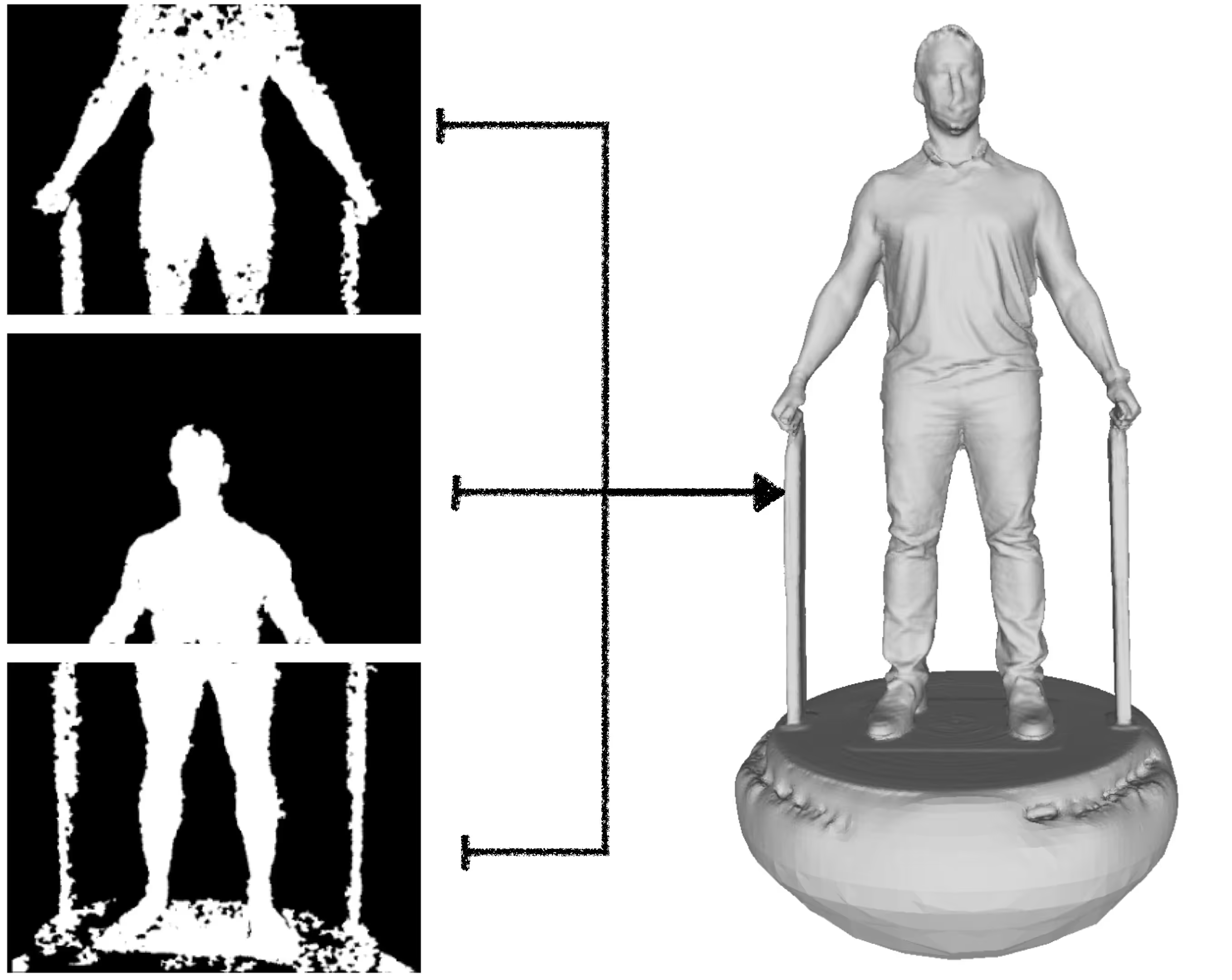

Turn the depth images into a point cloud / mesh

The Fit3D software uses complex computer vision and machine learning techniques such as SLAM (simultaneous localization and mapping), ICP (iterative closest point), and point set registration to align the depth images from the Fit3D cameras and turn them into a point cloud / mesh. This work runs on physical servers owned by Fit3D that use very expensive GPUs to process the data.
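SLAM and full registration pipelines are far beyond a blog-sized snippet, but the core idea of ICP fits in a few lines. Here's a minimal point-to-point ICP sketch using NumPy, assuming two point clouds that are already roughly aligned (for example, from neighboring depth frames). It uses brute-force nearest neighbors and the SVD-based Kabsch fit; it is an illustration of the technique, not Fit3D's production code.

```python
import numpy as np

def best_fit_transform(src: np.ndarray, dst: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Least-squares rigid transform (R, t) mapping src onto dst via SVD (Kabsch)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)   # 3x3 cross-covariance of centered points
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:              # correct an improper rotation (reflection)
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    t = dst_c - R @ src_c
    return R, t

def icp(src: np.ndarray, dst: np.ndarray, max_iters: int = 50, tol: float = 1e-10):
    """Point-to-point ICP: match each src point to its nearest dst point, re-fit, repeat.

    Returns the accumulated rotation, translation, and final RMS residual.
    """
    current = src.astype(float).copy()
    R_total, t_total = np.eye(3), np.zeros(3)
    prev_err = err = np.inf
    for _ in range(max_iters):
        # Brute-force nearest neighbors; larger clouds typically use a k-d tree
        d2 = ((current[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        matched = dst[d2.argmin(axis=1)]
        R, t = best_fit_transform(current, matched)
        current = current @ R.T + t
        # Compose this step with the transform accumulated so far
        R_total, t_total = R @ R_total, R @ t_total + t
        err = np.sqrt(((current - matched) ** 2).sum(axis=1).mean())
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return R_total, t_total, err
```

Every iteration assumes the two clouds differ by a single rigid motion. A subject who moves mid-scan breaks that assumption, which is one reason the stay-still guideline below matters.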

At this point, the mesh includes the entire background, the scanner platform, and any other objects in view. It is essentially a 3D representation of your scanning room from the cameras' viewpoint.
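The background ends up in the cloud because every pixel with a valid depth reading becomes a 3D point. Here's a minimal sketch of that back-projection step, assuming an idealized pinhole camera with known intrinsics (the parameter names are illustrative). Real depth cameras also need lens distortion correction and per-camera extrinsics to place each cloud in a shared frame, which this sketch omits.

```python
import numpy as np

def depth_to_points(depth_m: np.ndarray, fx: float, fy: float,
                    cx: float, cy: float) -> np.ndarray:
    """Back-project a depth image (meters) into camera-frame 3D points.

    Assumes a pinhole camera with focal lengths (fx, fy) and principal point
    (cx, cy) in pixels. Pixels with depth 0 are treated as invalid and skipped.
    """
    h, w = depth_m.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # pixel column and row indices
    z = depth_m
    valid = z > 0
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    # One (x, y, z) row per valid pixel, in row-major pixel order
    return np.stack([x[valid], y[valid], z[valid]], axis=1)
```

Nothing in this step knows which pixels belong to the person, so walls, the platform, and the handles all come along until the cleanup step removes them.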

For this process to complete successfully, the end-user must stay still during the scan, and no infrared light can be shining on the subject or into the camera sensors.

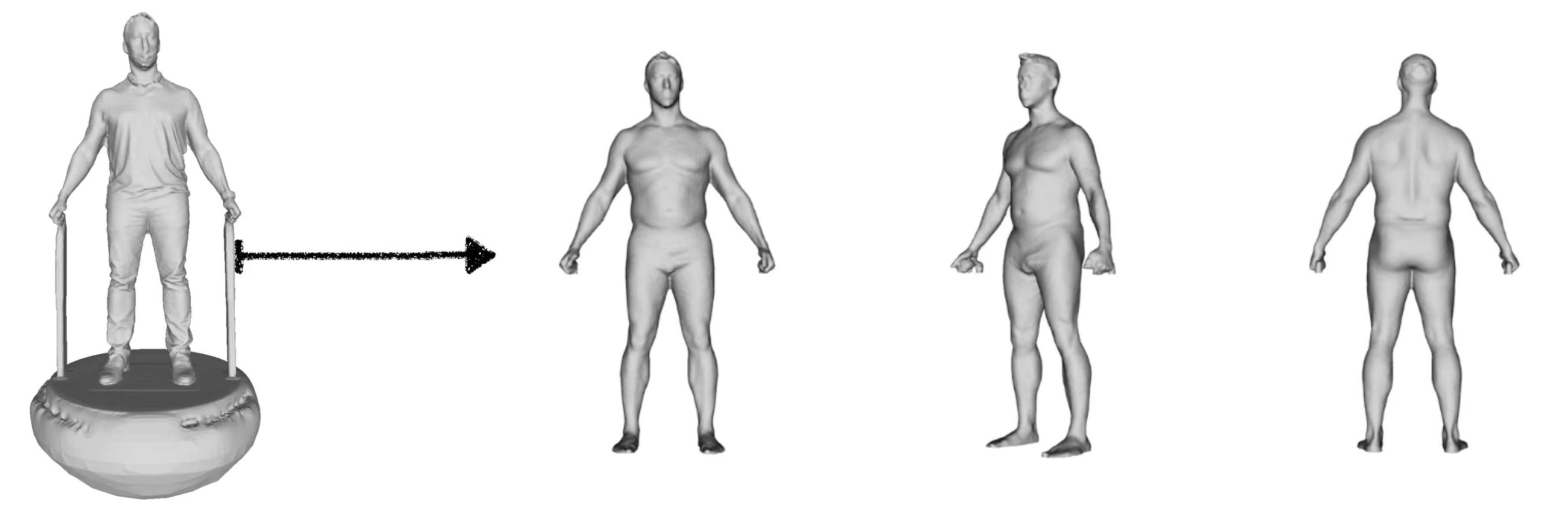

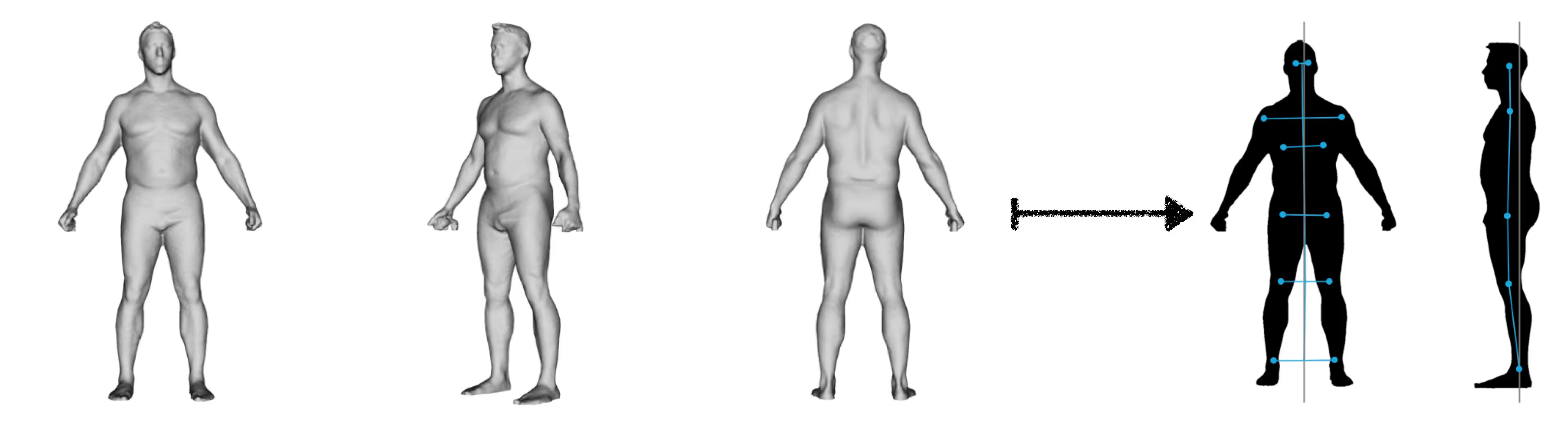

Clean the non-human features from the mesh

The Fit3D software then uses computer vision techniques such as masking and image segmentation to separate the user from the scan platform, handles, and background. These techniques are fairly routine for 2D images these days, but they remain very complicated for 3D scenes.

When this step is complete, the 3D mesh contains only the subject, without the background.

For this process to complete successfully, there can be no objects too close to the scanning turntable or the end-user during the scan capture process.
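Fit3D uses masking and image segmentation for this step; a much cruder geometric stand-in helps show why the clearance rule matters. Assuming the turntable axis position is known and z points up, a first pass can keep only points inside a vertical cylinder above the platform. All the numbers below are hypothetical.

```python
import math

def crop_to_subject(points, axis_xy=(0.0, 0.0), radius_m=0.6,
                    floor_z_m=0.05, max_z_m=2.3):
    """Keep (x, y, z) points inside a vertical cylinder above the turntable.

    Hypothetical parameters: turntable axis position, cylinder radius,
    and the height band above the platform surface (all in meters).
    """
    ax, ay = axis_xy
    kept = []
    for x, y, z in points:
        inside_radius = math.hypot(x - ax, y - ay) <= radius_m
        above_platform = floor_z_m < z <= max_z_m
        if inside_radius and above_platform:
            kept.append((x, y, z))
    return kept
```

A crop like this discards distant walls and the platform surface, but anything that sits inside the cylinder (the handles, or an object placed too close to the turntable) survives and has to be separated from the body by more sophisticated segmentation.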

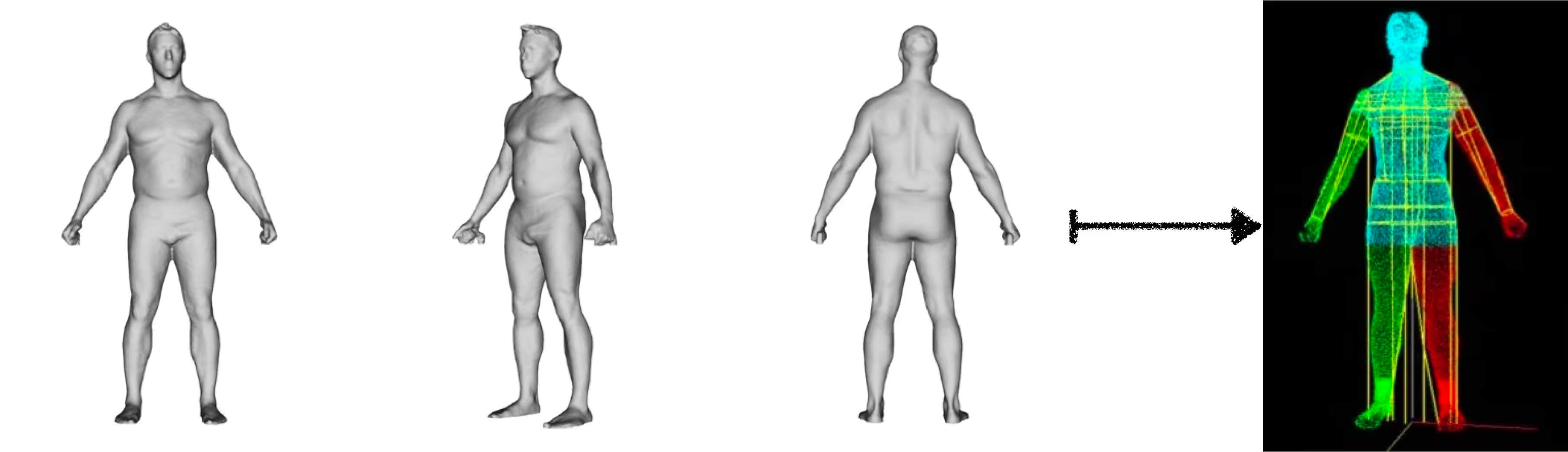

Extract measurements from the scan

The Fit3D software then runs a separate series of machine learning and computer vision algorithms to detect specific features (i.e., feature detection), such as the end-user's chin, shoulders, elbows, wrists, hips, knees, and ankles. These are features that look similar across many variations of body type.

Following the initial feature detection, our software looks for sub-features as offsets from the major features. These are less defined and vary more across body types. Sub-features include the meatier parts of the body, such as the biceps, forearms, waist, thighs, and calves.
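The post doesn't describe how the offsets are defined. One simple way to express "a sub-feature as an offset from major features" is linear interpolation along a limb segment; the sketch below assumes that, with made-up fractions, purely to illustrate the idea. Estimates like these are best treated as starting points for searching the nearby mesh.

```python
Point3 = tuple[float, float, float]

# Hypothetical offsets: each sub-feature is placed a fixed fraction of the way
# along the segment between two detected major features.
SUB_FEATURE_OFFSETS = {
    "biceps":  ("shoulder", "elbow", 0.5),
    "forearm": ("elbow", "wrist", 0.3),
    "thigh":   ("hip", "knee", 0.4),
    "calf":    ("knee", "ankle", 0.3),
}

def locate_sub_features(landmarks: dict[str, Point3]) -> dict[str, Point3]:
    """Estimate sub-feature positions as offsets between major landmarks."""
    result = {}
    for name, (start, end, frac) in SUB_FEATURE_OFFSETS.items():
        if start not in landmarks or end not in landmarks:
            continue  # skip sub-features whose anchor landmarks were not detected
        a, b = landmarks[start], landmarks[end]
        result[name] = tuple(ai + frac * (bi - ai) for ai, bi in zip(a, b))
    return result
```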

When all of these features have been detected, the software begins taking measurements on the mesh using computer vision techniques such as convex hulls. By the end of this step, the system has produced hundreds of measurements, including length, contour, circumference, sagittal, depth, surface area, and volumetric measurements.
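Wrapping a convex hull around a horizontal cross-section of the mesh mimics a tape measure, which bridges concave areas instead of following every dip. Here's a sketch assuming the mesh vertices are available as (x, y, z) tuples with z pointing up; the slice tolerance is hypothetical, the hull uses Andrew's monotone chain algorithm, and Fit3D's actual measurement definitions are not described in this post.

```python
import math

def slice_at_height(points3d, z, band=0.005):
    """Project vertices within +/- band (meters) of height z onto the x-y plane."""
    return [(x, y) for x, y, pz in points3d if abs(pz - z) <= band]

def convex_hull(points):
    """Andrew's monotone chain: hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        # z-component of (a - o) x (b - o); > 0 means a left turn
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def hull_circumference(slice_points):
    """Perimeter of the convex hull of a 2D cross-section, like a taut tape."""
    hull = convex_hull(slice_points)
    if len(hull) < 2:
        return 0.0
    return sum(math.dist(hull[i], hull[(i + 1) % len(hull)]) for i in range(len(hull)))
```

Because the hull ignores interior and concave points, a notch in the cross-section (for example, at the small of the back) doesn't shorten the measurement, just as it wouldn't with a physical tape.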

For this process to complete successfully, end-users must closely follow the scan directions. If hair is down, there is too much movement, or improper clothing is worn, all of the captured measurements can be thrown off. These measurements are also the main input to the wellness algorithms, so meeting the scan guidelines during every scan is extremely important.

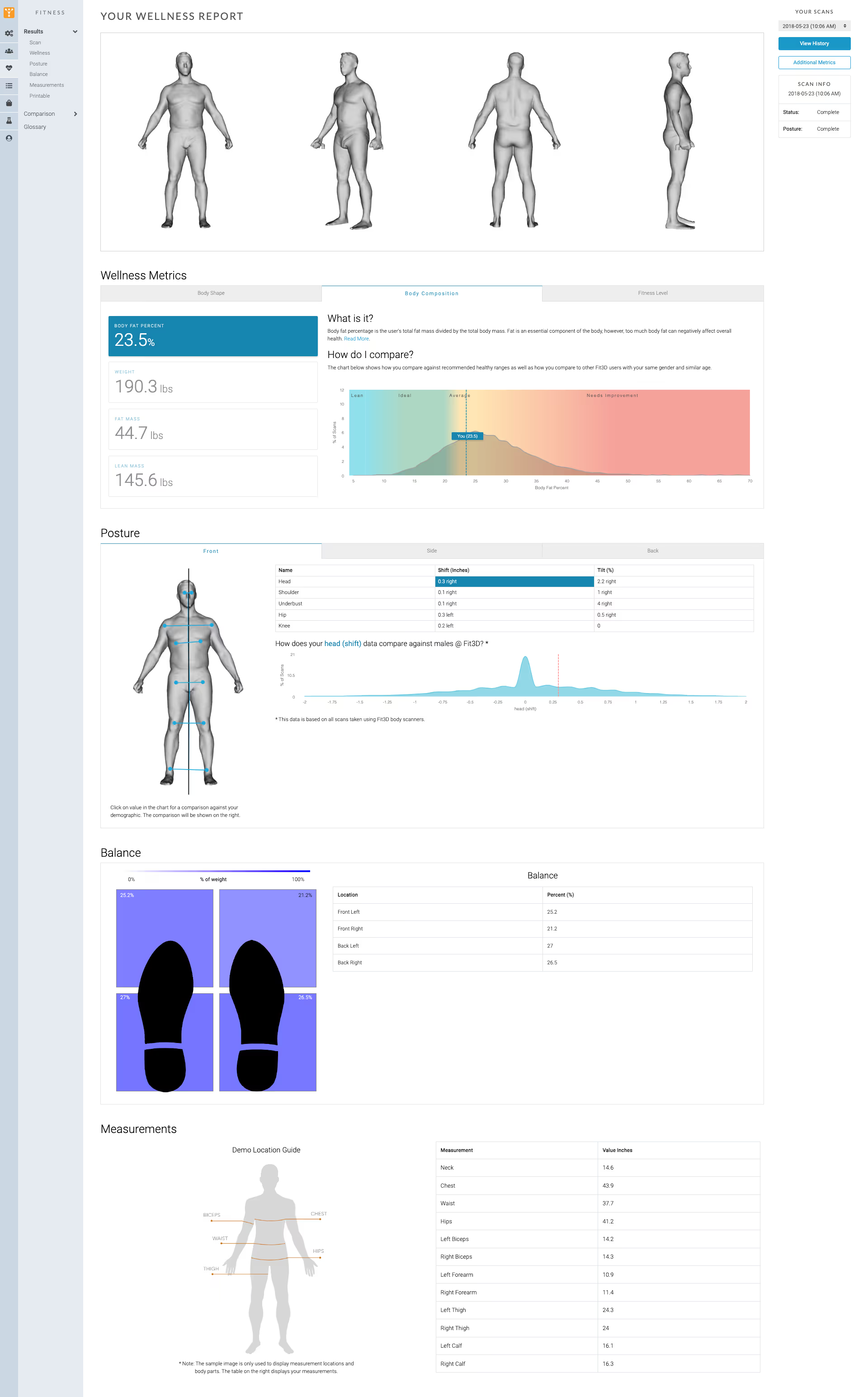

Process wellness assessments from the measurements

Fit3D worked with leading research institutions like Mount Sinai, UCSF, LSU, Harvard Medical, and many others to develop our wellness metrics, like Body Shape Rating and body composition.

To build the comparative dataset, we partnered with institutions that housed DEXA units. We captured body composition and bone density on the DEXA units and then had the same subjects take Fit3D body scans in the same session. We correlated the two datasets to produce an algorithm that predicts body composition from Fit3D body measurements.
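The post doesn't specify the form of the model. As the simplest possible illustration of "correlate scan measurements with DEXA results, then predict," here's an ordinary least-squares linear fit with NumPy. The linear form and the choice of input measurements are assumptions; Fit3D's actual algorithm is not described here.

```python
import numpy as np

def _design_matrix(measurements: np.ndarray) -> np.ndarray:
    """Prepend a column of ones so the model includes an intercept."""
    return np.column_stack([np.ones(len(measurements)), measurements])

def fit_body_fat_model(measurements: np.ndarray, dexa_body_fat_pct: np.ndarray) -> np.ndarray:
    """Fit linear coefficients mapping scan measurements to DEXA body fat %.

    measurements: (n_subjects, n_features) array of scan-derived values.
    Returns coefficients with the intercept first.
    """
    coef, *_ = np.linalg.lstsq(_design_matrix(measurements), dexa_body_fat_pct, rcond=None)
    return coef

def predict_body_fat(coef: np.ndarray, measurements: np.ndarray) -> np.ndarray:
    """Apply fitted coefficients to new scan measurements."""
    return _design_matrix(measurements) @ coef

def r_squared(y_true: np.ndarray, y_pred: np.ndarray) -> float:
    """Fraction of variance in the reference values explained by the predictions."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - np.mean(y_true)) ** 2)
    return float(1.0 - ss_res / ss_tot)
```

A model like this is normally judged on subjects held out from fitting, which is where a large paired dataset pays off.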

To put our body composition dataset size into perspective, tens of thousands of subjects took Fit3D and DEXA scans in the same session. Most other companies doing body composition research use fewer than 100 comparative subjects to build their algorithms.

Another fun fact about body composition: every solution, including MRI, DEXA, BIA, air displacement plethysmography (Bod Pod), dunk tanks, and skin calipers, uses algorithms to predict lean body mass and fat mass. The only true way to measure lean mass vs. fat mass is to autopsy a cadaver immediately after death (to account for water) and weigh the various tissue types. It is a common misconception that body composition devices measure lean mass and fat mass directly; ALL of them rely on some type of algorithm.
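Skin calipers make the point concrete: the calipers only measure skinfold thickness, and the body fat number comes from published regression equations. Below is the widely used Jackson-Pollock three-site equation for men (chest, abdomen, and thigh skinfolds in millimeters), which predicts body density, followed by the Siri equation, which converts density into body fat percentage. It is included only to illustrate the claim above and is unrelated to Fit3D's own algorithm.

```python
def jackson_pollock_3_site_male_density(chest_mm: float, abdomen_mm: float,
                                        thigh_mm: float, age_years: float) -> float:
    """Predicted body density (g/cm^3) from three skinfolds, Jackson-Pollock (men)."""
    s = chest_mm + abdomen_mm + thigh_mm
    return 1.10938 - 0.0008267 * s + 0.0000016 * s ** 2 - 0.0002574 * age_years

def siri_body_fat_pct(body_density: float) -> float:
    """Siri equation: convert body density into estimated body fat percentage."""
    return 495.0 / body_density - 450.0

density = jackson_pollock_3_site_male_density(20, 25, 15, age_years=30)
print(round(siri_body_fat_pct(density), 1))
```

Two separate regression models sit between the caliper reading and the reported percentage, each fitted to a reference population.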

We know our body composition algorithms are not perfect, but they are as good as it gets.

Process posture from the scan / measurements

Finally, we run one more computer vision / machine learning step that looks for the features required for posture analysis. This is very similar to the step where we extract measurements from the scan, but here we're looking for joint features, eye sockets, ears, etc.

From the 3D landmarks of these joint features, we determine the shift and tilt offsets from the center line for each scan. This is the data behind our posture analysis module.
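The post doesn't define the center line or the exact landmarks used. As an illustrative sketch, assume a coordinate frame with x running side to side, y front to back, and z up, and a hypothetical center line running vertically through the midpoint of the ankles. Tilt is then the angle of a left/right landmark pair from horizontal, and shift is how far the pair's midpoint sits from the center line. Only frontal-plane offsets are shown here.

```python
import math

def tilt_degrees(left, right):
    """Angle of the left-to-right landmark line from horizontal in the x-z plane.

    Positive when the right landmark sits higher than the left one.
    """
    dx = right[0] - left[0]
    dz = right[2] - left[2]
    return math.degrees(math.atan2(dz, dx))

def posture_offsets(landmarks):
    """Frontal-plane tilt and shift for shoulder and hip pairs.

    Hypothetical center line: vertical line through the midpoint of the ankles.
    """
    center_x = (landmarks["left_ankle"][0] + landmarks["right_ankle"][0]) / 2.0
    report = {}
    for part in ("shoulder", "hip"):
        left, right = landmarks[f"left_{part}"], landmarks[f"right_{part}"]
        report[part] = {
            "tilt_deg": tilt_degrees(left, right),
            "shift_m": (left[0] + right[0]) / 2.0 - center_x,
        }
    return report
```

The side view works the same way using y in place of x, for example to measure how far the ears sit in front of the center line.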

Making Data Available to End Users, Coaches, and Admins

After all of the steps above are complete, the end-user's data is separated from any identifiable data and written to the Fit3D Cloud databases.
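The post doesn't say how this separation is implemented. One standard technique is pseudonymization: store scan data under a keyed hash (HMAC) of the user's ID rather than the ID itself, and keep identifying fields in a separate store. The field names and key handling below are hypothetical.

```python
import hashlib
import hmac
import secrets

# Hypothetical server-side secret. A real deployment would load a persistent key
# from a key store kept apart from the scan database; a key generated per process,
# as here, would change every pseudonym on restart.
PSEUDONYM_KEY = secrets.token_bytes(32)

def pseudonym_for(user_id: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Derive a stable ID that can't be reversed to the user ID without the key."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()

def split_record(record: dict, pii_fields=("user_id", "name", "email")):
    """Split one record into (identity part, de-identified scan part)."""
    identity = {k: v for k, v in record.items() if k in pii_fields}
    scan = {k: v for k, v in record.items() if k not in pii_fields}
    scan["subject_id"] = pseudonym_for(record["user_id"])
    return identity, scan
```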

Then we send the end-user an email letting her know that her scan is ready to view at dashboard.fit3d.com. From there, end-users and coaches can review scans and wellness metrics and compare results with previous scans.

3D body scanning is a terribly complicated science, and there are many areas where challenges can arise. To our knowledge, Fit3D has the highest successful scan completion rate of any consumer-operated 3D scanning system, and we are very proud to have hidden the complexity of scanning so that we can give our customers and their members a great experience.

To learn more, feel free to reach out to our amazing sales team by filling out a lead form here.